"STRUCTURE"/"ACTION" CONTINGENCIES

AND THE MODEL OF PARALLEL DISTRIBUTED PROCESSING[1]

Journal for the Theory of Social Behaviour 23 (1993) 47-77

preprint version

Loet Leydesdorff

Department of Science Dynamics

Nieuwe Achtergracht 166

1018 WV AMSTERDAM

The Netherlands

loet@leydesdorff.net; http://www.leydesdorff.net

By considering actors in a network as parallel distributed processors, a cybernetic and dynamic model for "structure"/"action" contingency relations can be developed. This model will be discussed in relation to four relevant sociological theories: the formal network analysis of action, Giddens' structuration theory, the micro-situational and phenomenological approaches, and Parsonian structural-functionalism. Parallel distributed processing has in common with Luhmann's sociology that the phenomena are analyzed as the result not only of a differentiated system, but also of different systems with specific operations. Although cybernetic control remains, the new model allows, among other things, for degrees of self-organization ("freedom") in each system. Additionally, the model enables us to use existing algorithms of artificial intelligence and the mathematical theory of communication for the analysis of empirical problems.

Introduction

Crucial issues in the social sciences like "change and continuity," "innovation in relation to existing market structures," or "the effects and effectivity of policies," have in common that they address the enabling and constraining function of structure upon action, and the reproduction and reshaping of structure by (aggregates of) action. Therefore, the study of structure/action contingencies has become a central issue in much of modern sociology (e.g., Giddens 1979; Knorr-Cetina and Cicourel 1981; Burt 1982).

On the one hand, in formal network analysis researchers have focused on how structure is composed in terms of relations and positions. However, even the most advanced methodologies in this respect (e.g., Burt 1982), in my opinion, contain a discrepancy between a dynamic conceptualization of structure and an essentially static (multi-variate) methodology, and have too easily equated structure with "eigenstructure" in aggregates of action.

Others (e.g., Giddens 1979) have mainly focussed on the dynamic aspects of the structure/action contingency, and have distinguished more clearly between action, the results of aggregates of action, and driving forces. The advantage of this model is that it allows one to focus on micro-episodes while "bracketing" the wider contexts. Authors who have studied action in relation to local contexts have gained a lot of dynamics in their analysis (also in reaction to the normative aspects of Parsonian theorizing), but they have paid a price in terms of losing the possibility of raising questions about dynamic control: why do actors act? The structural explanation tends to be replaced by a "situational" one (e.g., Knorr-Cetina and Cicourel 1981).

The quest for a model in which the structure/action problem is addressed in a manner that is both dynamic and cybernetic will bring us to Luhmann's (1984) proposal to define society as the communication network that is added to the actors as the nodes. This reformulation of Parsons' systems theory in terms of empirically observable and analytically distinguishable systems additionally hypothesizes the self-organizing, and in this sense operationally independent character of each system. I shall argue that Luhmann's theory can be operationalized in terms of models for "parallel and distributed computing." Each actor is then considered as a processor which takes care of its own actions and reactions to the social system according to its own program (e.g., a self-referential loop). The network of links differs in nature and in operation from the systems which carry the network physically. Actions are also communications within the system of links.

The network is a virtual system, since its memory function is physically located at the nodes. This system operates in terms of actions, and is therefore structurally coupled to actors. Structurally coupled systems set dynamic boundary conditions for each other: they interact in the (observable) events. The observable events are relevant to the various connected systems. In addition to the possibility of defining the nodes as processors with specific input/output relations in the network at each moment in time, the parallel distributed processing model enables us to specify relations which the node(s) and the network(s) may develop over time. The expected relations between change in structure and action can be specified in terms of operational programs in the respective systems of reference (cf. Rumelhart et al. 1986).

This result must be given a further theoretical interpretation. For this purpose, I shall first discuss four prevailing models of "structure"/"action" contingencies in sociology. I argue that the model of parallel distributed processing for "structure"/"action" contingencies improves on each of these four models. Additionally, the new model will help us to understand the relative positions of the other models, and thus allow us to specify the conditions under which empirical results from these theoretical traditions can be made relevant.

Four existing models of "structure"/"action" contingencies

In her introduction to an overview of studies addressing structure/action dichotomies, Knorr (1981: 25f.) distinguished two major hypotheses about the relations between macro- and micro-phenomena, namely (i) the "aggregation hypothesis" and (ii) the "hypothesis of unintended consequences." The "aggregation hypothesis" leads to questions concerning the (multi-variate) composition of the network, while the "hypothesis of unintended consequences" points to the dynamic effects of changes in the network for other reference systems.

As noted, the multi-variate analysis of network structures is well elaborated in formal network analysis. For the elaboration of the "hypothesis of unintended consequences," Knorr (1981: 27) pointed to the work of notably Giddens (1979), who argued that:

"(s)ocial systems appear to exist and to be structured only 'in and through' their reproduction in micro-social interactions which are in turn limited and modalized through the unintended consequences of previous and parallel social action."

However, in the same volume, Giddens (1981: 167) stated that his model was intended primarily to offer a specific solution to the gap between two other theories, viz. action theory and institutional analysis. These theories had arisen historically from the situation in post-war, and especially American, sociology. While originating from the same roots (Weber, Durkheim, Schütz), symbolic interactionism had developed methods to understand ("Verstehen") human action and interaction, whereas systems theory and functionalism had developed the macro-perspective. As Giddens (1981: 167f.) put it:

"One might perhaps suppose that the dualism between action and institutional analysis-- (...)-- is simply an expression of two perspectives in social analysis: the micro- and macro-sociological perspectives. Indeed, I think this is a view either explicitly adopted by many authors, or implicitly assumed to be the case. (...) The two traditions of thought, of course, have been in some competition with one another; by and large, however, each seems to have respected the domain of the other. The result has been a sort of mutual accommodation, organized around a division of labour between micro- and macrosociological analysis."

Therefore, in addition to the aggregation model and the specific synthesis which Giddens' "structuration theory" offers for this historical dilemma (e.g., Giddens 1979), two underlying models of structure/action contingencies have to be discussed, namely: symbolic interactionism and other "situationalist" approaches on the one hand, and on the other, sociological systems theory, which in one way or another is mostly rooted in Parsons' structural-functionalism.

a. The Aggregation Hypothesis

In the network analysis tradition it is assumed that "structure" can be analyzed in terms of aggregated action patterns as they are contained in network data. The suggestion that one is thus studying "structure" is reinforced by the methodological terminology of "structural analysis" and "structural equivalence". Multi-variate analysis of the aggregate of "action" in a network, however, provides us only with an instantaneous view of "structure" in the network data (e.g., "eigenstructure"). The problem of relating this static picture to the time series perspective has so far remained unsolved in formal network analysis.

Figure 1 The Structuralist Model (Burt, 1982, p. 9)

In his seminal study, Burt (1982: 9) presented his general model as in Figure 1. While the loop suggests a dynamic feedback, in methodological terms the model is still static: it is a momentary loop, and not a spiral! If I extend it to a spiral with time as a separate dimension, it takes the shape of Figure 2. However, this is a rather different model: in Burt's model structure has to be explained in terms of (aggregates and patterns of) action, but structure in Figure 2 has an additional incoming arrow from structure at a previous moment. "Eigenstructure" in the data within a network at each moment in time should therefore be distinguished from the structure of the system maintained in its relation to relevant environments. With the model of Figure 1 one can study only the relations between various aggregates of actions; it is therefore only a special case of multi-variate analysis of these data, and it does not yet touch the core questions concerning the dynamics of "structure"/"action" contingencies.

Figure 2 A dynamic extension of the structuralist model of Figure 1

The extension from Figure 1 to Figure 2, i.e., the explicit introduction of the time axis, is not only methodologically fruitful (cf. Leydesdorff 1991), but also theoretically meaningful. The point here is that the dynamic conceptualization gives us a feel for the two self-referential loops which interact in the reproduction of structure by action: on the one hand, the feedback for actions which may lead to new actions (e.g., in learning), and on the other, the possibility for structure to be reproduced and reshaped.
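The difference between the two figures can be written down compactly. The notation below is only an illustrative sketch, not a formal specification taken from Burt or from the present model: the symbols S_t and A_t (structure and aggregated action at time t) and the unspecified functions f and g are assumptions introduced here for the purpose of exposition.

```latex
% Figure 1: a momentary loop; structure is explained by action at the same moment
S_t = f(A_t)

% Figure 2: a spiral; two self-referential loops interact over time
S_t = f(S_{t-1}, A_t), \qquad A_t = g(A_{t-1}, S_{t-1})
```

In this notation, the incoming arrow from S_{t-1} is what distinguishes the reproduction of structure over time from the "eigenstructure" found in the data at a single moment.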

b. The Hypothesis of "Unintended Consequences"

One cannot specify how the two heads of the incoming arrows for structure in Figure 2 will interact in the reproduction of structure without a theory about the dynamic development of structure/action contingency relations. Giddens (1979 and 1984) identified this problem in his structuration theory as the crucial problem of "the duality of structure."

Giddens, however, redefined "structure" as the "rules and resources" upon which actors can draw: it is only a "virtual" frame of reference for action, which exists analytically "outside of time-space" (Giddens 1981: 171f.). According to Giddens (1984), social structures exist in social reality only by implication, i.e., in their "instantiation" in the knowledgeable activities of situated actors. Consequently, structures do not structure action, they only enable and constrain ("structurate") it. (The singular term "structure" is sometimes reserved for a mediating variable which is attributed to actors, such as "memory traces.")

This definition of structure "outside of time-space" enabled Giddens to distinguish clearly between structure and the aggregated outcome of action (in social time and space), which is then labeled a "system" (e.g., Giddens 1981: 169). We can schematically depict Giddens' "structuration theory of action," analogously to Figure 1, as follows:

Figure 3 A schematic depiction of Giddens' structuration model

Or, when we again add a time horizon:

Figure 4 The dynamic extension of the model of Figure 3

The problem obviously remains the specification of how the circle can be closed, i.e., how structure is reproduced in relation to aggregates of action that exhibit "systemness." In Giddens' structuration theory, "the duality of structure" is this recursive operation of structure upon aggregates of action (e.g., Giddens 1984: 374).

Since the duality of structure is an attribute of structure, however virtual this system may be, Giddens should have proceeded to specify what it means when a structure is virtual, and how the duality which is ascribed to it operates in this virtual reality. Giddens, however, did not positively operationalize structure; he only specified it in negative terms, e.g., as an "absent set of differences" (Giddens 1979: 64), and in terms of its consequences for the action system. He suggested that the further elaboration of a theory of "structure" (in his sense of the word, i.e., of cultural rules and resources) would imply a return to systems theory (cf. Giddens 1979: 73-6), since one would have to hypothesize entities which remain "virtual" in social action. According to Giddens (1981: 172f.):

"(...) 'social reproduction' (...) has itself to be explained in terms of the structurally bounded and contingently applied knowledgeability of social actors. It is worth emphasizing this, not merely in respect to criticizing orthodox functionalism, but also in regard of the not infrequent tendency of Marxist authors to suppose that 'social reproduction' has magical explanatory properties--as if merely to invoke the term is to explain something."

The fact, however, that some authors invoke structural mechanisms without sufficient ex ante specification of the relevant reference systems (see also Giddens 1979: 111 ff.) is a methodological argument, but not sufficient evidence against the (virtual) existence of these systems, nor against the possibility of specifying their operation. The operation of a virtual system can have empirical consequences for the system with respect to which it remains virtual. In the case of the effects of the virtual structure upon historical action, Giddens acknowledges this as "structuration."[2]

In order to prevent any specification of structure "outside time and space" in empirical research, Giddens recommends as a method that "structure" be described only historically and contextually, i.e., operationalized in specific historical instances (cf. Giddens 1984: 304). The mutual contingency of structure and action is then to be studied by "bracketing"--in the phenomenological sense of epoché (Giddens 1979: 81)--the institutional dimension when the analysis is at the level of strategic conduct; and vice versa, the latter has to be bracketed when one analyzes the former. So, the two perspectives can be developed as different views of the same matter, which together give a fuller insight into the mutual contingencies.

By shifting to the methodological level, Giddens has, however, unfortunately bracketed also the substantive question concerning the duality of structure, as he is himself aware (e.g., Giddens 1979: 95). Structuration theory contains all the structural elements needed for the parallel distributed processing model, but for methodological reasons, it denies the possibility of specifying the operation of "structure"; moreover, Giddens refuses to allow its substance to be made the subject of systematic theorizing (see, for a strongly worded rejection of any attempt in this direction, e.g., Giddens 1984: xxxvii).

Questions like why actors act, or how the aggregate of actions will condition different actions at a later stage, or what will be the precise analytical position of the third arrow (in Figure 3) which closes the loop, remain unanswerable without the specification of how structure operates. Without such a theory, one can only analyze social action with reference to the incoming and outgoing arrows of action, i.e., with reference to structuration or aggregation, but not with reference to the duality of social structure itself.

c. Symbolic Interactionism and the Situational Approach

While in Giddens' model there are "rules and resources" that have a specific meaning for localized actors, the micro-constructivists are not interested in the specification of "structure," but in the methodology of taking actors and their interactions as units of analysis. In his authoritative study of symbolic interactionism, Blumer (1969: 8) stated:

"The importance lies in the fact that social interaction is a process that forms human conduct instead of being merely a means or a setting for the expression or release of human conduct."

Blumer placed the roots of this tradition in G. H. Mead's reformulation of the self as the result of a process of social interaction (see Mead 1934: 26f.). The communicative structure pervades the action system. Society, as Cooley (1902) once argued, exists inside the individual in the form of language and thought.

The basic unit of analysis in any interactionist account is the joint act--the interactional episode (Lindesmith et al. 1975: 4). The interactional episode is part of the larger society. The latter is, however, consistently treated as the result of the interactions. With other micro-constructivists, Knorr (1981: 27) argued that the "situational approach" is the road sociology must take for methodological reasons, since:

"(...) unlike the natural sciences the social sciences cannot hope to get to know the macro-order conceived in terms of emergent properties: they are methodologically bound to draw upon member's knowledge and accounts (...)"

Although the focus is on "knowledge and accounts," i.e. on elements of communication, the methodological procedure is necessarily confined to the study of these localizable interactions and their aggregations. As Blumer (1969: 58) stated:

"The concatenation of such actions taking place at the different points constitute the organization of the given molar unit of large-scale area. (...) Instead of accounting for the activity of the organization and its parts in terms of organizational principles or system principles, it seeks explanation in the way the participants define, interpret, and meet the situations at their respective points. The linking together of this knowledge of concatenated actions yields a picture of the organized complex."

Note the electro-technical metaphor: the network is not considered as a system itself, but rather pictured as a complex concatenation ("wiring") of the components. Consequently, there is no hierarchy but heterarchy in the network. One can understand the whole more fully only by taking the situations apart and by studying them separately.

In my opinion, the focus on specific episodes in micro-constructivism and symbolic interactionism has resulted in a richness of understanding which cannot easily be brought into the systems perspective (cf. Knorr-Cetina and Cicourel 1981). These authors have substantiated their critique of systems approaches: in order to be useful for empirical research, a model should be able to account for the specificities of localized action and interaction. However, the situationalist approach fails us if we wish to understand how and why interactions are connected. Some authors in this tradition have specified control as, for example, emergent "alignment" (e.g., Fujimura 1987); but the conditions governing such control cannot be specified theoretically within this framework.

d. Systems Theory in Sociology

A central question to be raised on the basis of the above discussion is whether action and structure are to be considered as external to each other or as contained within one interaction. We have seen how the dynamic extension of Burt's model gave us a model which contained two loops. In principle, Giddens had also two axes in his model, but by the methodology of "bracketing" they were juxtaposed to each other. Furthermore, since he did not specify the relations at the structural level, his theory was methodologically constrained to specifying empirical research only in relation to the action system, and its incoming and outgoing arrows.

Symbolic interactionism and Parsonian structural-functionalism can be considered as two grandiose attempts to develop sociology as the study of one core operation of society. While Parsons defined structure within "the unit of action", Blumer defined action "within the unit of interaction." Interaction also provides the mechanism for how social worlds are constructed, but in Parsons' model action serves as the mechanism of control for (structural) integration (cf. Grathoff 1978). Parsons made the cybernetic model a crucial element of his theory. The major research question, however, remained the traditional problem of how social order is maintained (cf. Hobbes), i.e. the integration of action into social structure. Parsons' crucial innovation was to reverse the arrow: action is not integrated into a given social structure, but social structure is integrated into each unit of action.

Parsons (1952) emphasized that this specific cybernetic model for sociology finds its origins in the convergence of Durkheim's insight that "the individual, as a member of society, is not wholly free to make his own decisions but is in some sense 'constrained' to accept the orientations common to the society of which he is a member" with "Freud's discovery of the internalization of moral values as an essential part of the structure of the personality." It is well known that this vision led Parsons to develop a complex conceptual apparatus which, at those places where it is elaborated into categories susceptible to empirical research, is better equipped to explain social stability than social change (cf. Weinstein and Platt 1973: 32).

The model is static, since the influx of information into the system from the environment is no longer specifiable. If structure is always integrated into action, there is no environment left, and therefore no systematic position for feedback by action(s) over time can be defined. Luhmann (1977: 65) argued that Parsons' theory can consequently be seen as the most systematic attempt to understand the relation between the individual and society as one which is internal to the system.

Luhmann's sociology and the model of parallel and distributed processing

Luhmann (1984) proposed to reformulate the relation between the individual and society in terms of two types of systems which operate in each other's environments, and over time. Society is then defined as a communication system among individuals. The two systems are operationally independent, but they interact in events that can then be labeled actions with reference to actors, and communications with reference to the network.

Independently of whether or not parallel and distributed computing is technically feasible, or should only be considered as a model for virtual computer operations in a large machine, this model provides us with an opportunity to operationalize the external cybernetic relations which are implied in Luhmann's sociology. Note that parallel distributed programming was originally introduced as a means to divide a complex task into smaller parts which can then be run concurrently on various computers which are held together in one environment. In social theory, the concept of social division of labour has been basic to the concept of functional differentiation (cf. Durkheim 1930; Habermas 1981). Social division of labour implies the decomposition of tasks into components which can be done simultaneously, i.e., next to each other, and the subsequent integration of the results. In a functionally differentiated social system there is no central coordination, but the various processes develop according to their own programs, and in relation to one another. Thus, in addition to relations between actors and the network, we shall have to specify how the network can obtain (and maintain) a complex structure.

How is information generated in a communication system? When does a communication network develop according to its own logic? How can one follow Luhmann when he argues that the social communication system is not only operationally closed, but even self-organizing ("autopoietic"), analogously to the self-organizing character of biological and psychological systems (cf. Maturana and Varela 1980)? I shall now turn to the question of how the parallel and distributed computer model enables us to reformulate these claims as empirical hypotheses.

The operational independence of the social system

The usual way to argue for the existence of a social system as a system sui generis has been by using the argument of "double contingency" in interaction (cf. Parsons and Shils 1951: 3-39; Parsons 1968; and Luhmann 1984: 148 ff.). However, the "double contingency" of Ego and Alter for each other is a necessary, but not a sufficient condition for the existence of a communication system: this "double contingency" has consequences for the awareness and behaviour of the actors involved, but it does not have to imply "communication" as part of an operationally closed and different system that could then be considered as an external source of variance.

How can one demonstrate the operational independence of the network system? While one can easily understand that society is a relevant environment for each individual (psychological) system, the operational independence of the social system implies the converse notion that each individual system is an environment for the social system. This idea is counter-intuitive, and therefore requires further warranty.

One can argue for the existence of the network as a distinct system by using the parallel and distributed computer model. In the mathematical theory of communication (Shannon 1948), the expected information content of the system is by definition equal to the uncertainty within the system. As long as an actor processes on its own, it (as a system) can only attribute its uncertainty to itself. However, as soon as two actors communicate, neither of them can internally generate the information necessary to exclude the possibility that the uncertainty in the communication has originated from (noise in) the communication. The uncertainty can be attributed to each of them or to the communication. Therefore, one has to assume that the communication system itself is able to generate uncertainty by operating.
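The argument can be illustrated with a small numerical sketch. The example below is not part of Shannon's or Luhmann's texts: it assumes, purely for illustration, a sender who emits two symbols with equal probability and a link that flips a symbol with a given probability (the names `entropy`, `channel_noise_bits` and `flip` are hypothetical). The conditional uncertainty H(Y|X) = H(X,Y) - H(X) is then the part of the uncertainty that arises in the communication itself and that cannot be attributed to the sender.

```python
from math import log2


def entropy(probabilities):
    """Shannon's expected information content (uncertainty) in bits."""
    return -sum(p * log2(p) for p in probabilities if p > 0)


def channel_noise_bits(p_sender, flip):
    """Uncertainty added by the link itself: H(Y|X) = H(X,Y) - H(X).

    Assumes a symmetric link that delivers each symbol unchanged with
    probability (1 - flip) and changes it with probability flip.
    """
    joint = []
    for p_x in p_sender:
        joint.append(p_x * (1 - flip))  # symbol arrives unchanged
        joint.append(p_x * flip)        # symbol is altered in transit
    return entropy(joint) - entropy(p_sender)


sender = [0.5, 0.5]                                  # assumed sender distribution
h_sender = entropy(sender)                           # uncertainty of the sender alone
noiseless = channel_noise_bits(sender, flip=0.0)     # no uncertainty added by the link
noisy = channel_noise_bits(sender, flip=0.1)         # about 0.47 bits added by the link
```

Under these assumptions the receiver cannot tell, from the received symbol alone, whether a deviation stems from the sender or from the link; the additional term is a property of the communication and not of either actor.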

The advantage of the specification of the communication system as operationally independent, in comparison with the argument from the double contingency of relations among actors, is that it defines the communication system as based in uncertainty which cannot be reduced to the awareness and perceptions of the actors involved.[3] Since this part of the uncertainty in the communication originates independently from the intentions of the actors involved, one has to specify how it can be generated by the communication system.

The Network Networks, and the Actor Acts

In common-sense language, one is accustomed to thinking of actors and networks as intrinsically related: networks operate because actors act within them, and actors operate through networks. This language may be adequate to describe social reality at face-value; but in terms of an explanatory model, a description of what actually happened as an outcome in social reality informs us only about the (dependent) results which are to be explained in terms of the underlying processes. If one is additionally allowed to assume that the network networks, and the actor acts, one may be able to specify the conditions under which actors network, and the networks act, i.e., instances of which one was able to give only a description ex post in common-sense language. However, in order to do so, one has to give a meaning to the non-common-sensical expression that "the network networks."

First, we have to understand that two systems can be structurally coupled while each performs its own operation. Networks and actors are structurally coupled: the actors are the nodes of the network, and the network of links is necessary for their functioning, and probably even their survival. That two systems can be structurally coupled, and yet each perform a different operation, can nowadays be more easily understood because of the widespread diffusion of the personal computer. For the user of a word processor, the operating system of the PC remains "virtual." There is no problem in sharing the processor with another user, who may for example be using a database manager, so long as the operating system takes care that we each get our own messages back to our respective screens. Obviously, there are more systems operating in this situation; their operations interact, but remain external to one another.

However, in the personal computer there is no mutual dependency: the operating system can continue to operate after a user leaves the word processor. A social system is much more complex than a personal computer. If one actor ceases to function, the network will have to change in structural, i.e. topographical, terms, but it will usually continue to function. Although each actor is structurally coupled to the network, the network does not require his/her participation for its operation. For example, patterns can be maintained in the network over time, even when the actors have been replaced. Each change in the network requires action at local nodes, but the system of reference for change is the network, and not the actors involved. Logically, the network therefore can be considered as running its own programme. It performs its own self-referential loops.

Before we address the question of the mechanisms of coupling and interaction between two systems, let us reflect for a moment on the meaning of the concept that "the network networks." In terms of hardware, the network does not network otherwise than through the actions of actors. Any memory attributed to the network is virtual memory which is physically located in actors. One actor or another has to make the noises which the network can use as the basis for a communication process. The actor is also structurally a node in the network: he/she may be addressed, may be expected to act (e.g., to respond), etc.; but whether he/she does so remains up to him/her: the actor acts. The concept of external operation sets the systems "free": insofar as they do not interact, they are only conditioned by one another.

Among the various actions an actor can perform, going to the network is a specific one; the actors have also discretionary room. However, is the converse also true: can the network do something other than address an actor? Yes, and this is an essential step which I owe to Luhmann: the network can grow, and become more complex by using actions at the local nodes as disturbances. Since within a communication system there may be communications about communications in the network, this system can develop higher order complexities like differentiation and reflexivity. It cannot do this without action at some local nodes, but feedback loops in the communication system can stabilize structures within the network. This stabilization occurs at the level of the communication system, and it cannot be attributed to specific nodes. The events are then the loci of the recursive operation of structure upon the information previously contained within the network. Although the network is structurally coupled with, and thus contingent upon actions by actors, its operation is different from being a simple aggregate of actions at each moment in time. Otherwise similar actions can then have different effects in terms of the self-referential development of the network.

In philosophical terms, one may consider the network as ultimately generated from communications (by actors), but in any empirical design and for any historical period one has to assume that the network already has a structure. Remember, however, that the network was defined as the communication system among people. Since this communication network is distributed by its nature, its structure contains uncertainty. In the reconstruction, i.e., when accessed by a local actor, one should therefore never reify this structure: any delineation of the network remains an expectation on the basis of the then available information. However, on the basis of such an hypothesis, the question of whether the network under study was segmented, stratified, functionally differentiated, reflexive and/or self-organizing can be analyzed empirically as an historical variable (cf. Teubner 1987).

The Role of the Network

How can the parallel distributed nodes be integrated in a network which is nothing more than a system of links? What difference does the existence of this network make for the operation at the nodes? Here, Parsons and Luhmann have extensively elaborated the concept of "interpenetration." I return to this issue in the next section, but I will first suggest how processors can operate independently in a network, and yet be constrained by it, by quoting a model for belief updates from Pearl (1988: 145f.). (The problem is known in artificial intelligence as the graph coloring problem.)

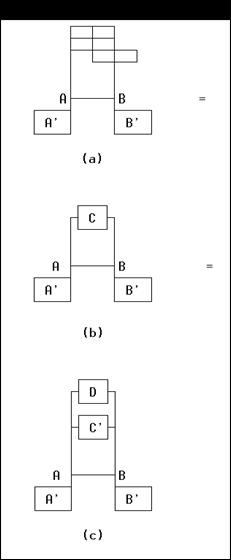

Figure 5 The graph colouring problem

Consider the graphs depicted in Figure 5. Figure 5a shows the initial state of the system: all nodes have the same value. However, each node has an internal program such that it cannot assume the same value as any of its neighbours. When a node perceives equality it assumes a new value; but let us assume that there are only three values {1,2,3}.

Figure 5b shows the configuration after the nodes are activated in the order A, B, C, D: a stable solution is established once C selects the value 2. If, instead, the activation schedule happens to be A, C, B, D, and if C selects the value 3, a deadlock occurs (Fig. 5c): B cannot find a value different from its neighbours. One way out of such a deadlock is to instruct each processor to change its value arbitrarily if no better one can be found. Indeed, if B changes its value to 2 (temporarily conflicting with A, as in Fig. 5d), it forces A to choose the value 3, thus realizing a global solution (Fig. 5e). However, there is no guarantee that a global solution will be found by means of repeated local relaxations, even if such a solution exists.[4]
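The relaxation process described above can be sketched in a few lines of Python. This is a minimal illustration, not Pearl's implementation. Since Figure 5 is not reproduced here, the topology (links A-B, A-D, B-C, B-D, and C-D) is an assumption reconstructed from the narrative, and the names `NEIGHBOURS`, `activate`, and `run`, as well as the per-node preference orders, are hypothetical.

```python
# Assumed topology, reconstructed from the text (Figure 5 itself is not available):
# every pair of nodes is linked except A and C.
NEIGHBOURS = {
    "A": {"B", "D"},
    "B": {"A", "C", "D"},
    "C": {"B", "D"},
    "D": {"A", "B", "C"},
}
VALUES = (1, 2, 3)


def activate(state, node, preference=VALUES):
    """Run the local program of one node; return False if the node is deadlocked."""
    taken = {state[n] for n in NEIGHBOURS[node]}
    if state[node] not in taken:
        return True  # no neighbour has the same value: nothing to do
    free = [v for v in preference if v not in taken]
    if not free:
        return False  # all three values are in use by neighbours
    state[node] = free[0]
    return True


def run(schedule, preferences=None):
    """Activate nodes in the given order; return (state, deadlocked node or None)."""
    preferences = preferences or {}
    state = {node: 1 for node in NEIGHBOURS}  # Fig. 5a: all nodes share one value
    for node in schedule:
        if not activate(state, node, preferences.get(node, VALUES)):
            return state, node
    return state, None


# Schedule A, B, C, D: a stable solution is reached (cf. Fig. 5b).
stable, blocked = run(["A", "B", "C", "D"])

# Schedule A, C, B, D with C preferring the value 3: B is deadlocked (cf. Fig. 5c).
stuck, blocked_node = run(["A", "C", "B", "D"], {"C": (3, 2, 1)})

# Way out: B changes its value arbitrarily to 2, which forces A to move to 3.
stuck["B"] = 2
activate(stuck, "A")
```

Under these assumptions the first schedule ends with C taking the value 2, the second leaves B without a free value, and the arbitrary change at B leads to a global solution. Each node consults only the values of its own neighbours; the deadlock is a property of the schedule and the architecture together, not of any single program.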

This model system demonstrates that the network architecture constrains the range of locally possible actions in each instance. The assumption that each node in the network has its own processor, and runs according to its own program, is then useful for the simulation of action/structure contingencies. Given the programmes which run in the processors at the nodes, and the structure of the overall network, social situations (e.g., the deadlock) will emerge.

The major attraction of the parallel distributed processing model for artificial intelligence has been the local character of processes of change. Whether or not a node has to take action depends only on its local situation. This result can be generalized: any inference rule in the form "If premise A, then action B" acts as a local triggering mechanism, i.e., it grants a license to initiate action whenever the premise A is true (or the event A happens) in the network K, regardless of the other information that K contains (Pearl 1988: 147). How the new information will be propagated through the network depends on the operation of each node through which the communication has to pass. Thus, propagation and backtracking are also determined locally.
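The local character of triggering and propagation can be illustrated with a small Python sketch. It is an illustration under stated assumptions only: the chain of four nodes, the function names `propagate` and `attenuate`, and the rule that a node adopts a stronger signal and passes on a weaker one are all hypothetical, chosen so that the update visibly stops where it no longer makes a local difference.

```python
from collections import deque


def propagate(links, programs, state, start, message):
    """Deliver a message at `start`; return the nodes whose state changed."""
    queue = deque([(start, message)])
    changed = []
    while queue:
        node, msg = queue.popleft()
        new_state, outgoing = programs[node](state[node], msg)
        if new_state == state[node]:
            continue  # premise not met locally: the node takes no action
        state[node] = new_state
        changed.append(node)
        queue.extend((neighbour, outgoing) for neighbour in links[node])
    return changed


def attenuate(current, msg):
    """Hypothetical node program: adopt a stronger signal, pass on a weaker one."""
    return max(current, msg), msg - 1


# Hypothetical chain A - B - C - D; every node runs the same program here,
# but each entry in `programs` could hold a different one.
links = {"A": ["B"], "B": ["A", "C"], "C": ["B", "D"], "D": ["C"]}
programs = {node: attenuate for node in links}
state = {node: 0 for node in links}
changed = propagate(links, programs, state, "A", 2)
```

In this run only A and B change; C receives a message that makes no difference to it, and D is never addressed. Whether and how far an update travels is decided at each node through which it has to pass.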

The advantage of this model for modern sociology is that it is cybernetic, and yet complies with the criterion (derived above) that a model should be able to account for the specificities of localized interactions: each node runs according to its own programme, and is in that sense "free" to follow its own programme, while it is also conditioned by its environment. The operation of the actor system may have to be defined psychologically, but the shape and the operation of the network itself are also crucial for the future functioning of each of the processors at its nodes. Since environments have to be specified as relations with other systems, all questions concerning interactions are now necessarily empirical.

The systems determine each other in the interaction, but the significance of each disturbance is controlled by each system's own programme. We can now specify how the network and the nodes may "irritate" each other (Luhmann) as perturbations in each other's environments which can be used for further differentiation, and thus for the maintenance of structure on both sides (cf. Leydesdorff 1992b). In contrast to human agency, however, the network has no central memory (cf. Latour 1988): the network distributes the chances for each of the nodes, while the nodes constitute the environment from which the network is irritated when chances are taken or not. What these irritations mean for the further development of the network, is determined with reference to the previous stage(s) of this system, and according to the logic contained in its structure and history. An analyst can again only hypothesize this logic reflexively.

Interpenetration

The issue of the relations between individuals and society, actors and structure, was made a focus of theorizing in sociological systems theory mainly under the heading of "interpenetration" (cf. Parsons 1964; Luhmann 1977, 1978, and 1984; Jensen 1978).[5] By using the model developed here, we can further specify actor/structure contingencies.

First, we have the notion of structural coupling: the nodes of the network represent the actors as addresses. Although each actor remains to some extent free with respect to position in the network, an actor is relationally embedded in the network at any position, and therefore necessarily constrained by it. Although these relations may change over time, the network sets the boundary conditions for the actors in it at each moment in time.

Conversely, the actors are also constitutive of the network. They define its boundaries at the structural level. In addition to these primary, mutually conditioning relations between the action systems and the network structure at each moment in time, there are more complex dynamic interactions. The actor produces an output which is a message for the network. The information is packaged as a message in the network, and this package can be deconstructed at a receiving end. However, when the receiving actor does not take the message, the information remains in the network, unless the network has a mechanism to destroy the information internally. Each action makes a difference to the network, and the consequent differentiation has an expected information content. The two systems of action and structure continuously interpenetrate when they operate; and they always have to operate in order to reproduce themselves. The systems constitute and reproduce themselves by operating, and are therefore dynamic by their nature.

Actors may also act without intending to communicate. An act which is not intended as a communication may be perceived by another actor, and either be brought into the communication system or not. In some settings (for example, in psychology or phenomenological sociology) one may wish to question whether the intention/perception relation is not itself an act of communication. In that case one has to redefine (and notably differentiate) the operation of the communication system: one attributes an expected information value in a dimension, which was previously understood as a default (cf. Bertsekas and Tsitsiklis 1989: 595).

The conditions under which developments in one system may cause structural change in coupled systems can now be specified. Redefinition of the operation of each of two interpenetrating systems may become necessary whenever: (1) the system has changed in terms of its operation, (2) a system in its environment has done so, or (3) a specific lock-in among systems has occurred (cf. Arthur 1988). It goes beyond the limits of this paper to discuss these instances in more detail, but the crucial point is that the various forms of path-dependent transitions and lock-ins cannot be reversed in self-referential systems without leaving traces. This makes their history irreversible (cf. Leydesdorff 1992a). Each system has different systems in its environment, each of which may go through unlikely transitions, autopoietically or in relation to their environments. An actor is, for example, not only a node in a communication system, but he/she has a physical body which operates biologically (and autopoietically) as well. Changes may come from all sides; each parallel processor is structurally dependent on its network components, and the network is itself a complex system which generates change internally, and in reaction to change in its environments.

Figure 6 Various substitutions for a complex network in relation to one of its links

The Local and the Global Network

Amidst all this complexity, one of the major advantages of the parallel distributed computing model--as noted, it was the major reason for its application in artificial intelligence--is the locality of events in it, and the consequent possibility of propagating the update only as far as the effects can be registered. In Figure 5, I gave an example of how the locality of the update works for the nodes, and in this section I elaborate on how locality ("situation") can be studied in terms of links.

Figure 6a represents a network with various nodes. The focus is on nodes A and B: they are the network addresses of the two local processors A' and B' in the network. A and B are (in this case) connected by a direct link, and parallel to this link through the remainder of the network. Note that however complex the remainder of the network may be, it can be represented as a remainder which is wired in parallel, and therefore it can be replaced by a (complex) parallel link C between two localizable nodes. (See also Figure 6b.)

By using the so-called theorem of Thévenin, an electro-technical engineer would be able to compute the complex impedance C as a single link which could substitute for this complex part of the network. In a social network, one can analogously replace the remainder of the network with the sum total of the uncertainty of all of its parts. Subsequently, one may decompose this sum total C again into C' (< C) and D, using other grouping rules. (See Figure 6c.) C, C', and D can be considered as localized processors that are performing their own transformations on the signals carried over their respective lines. For example, one may think of D as a processor which stabilizes the link by damping deviations from a preset value, while C' modulates variance. Or, in terms of social organization, one may think of the processing of communications by a control system in relation to the communications in a production system.
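One way to make the "sum total of the uncertainty" and its regrouping concrete is Shannon's entropy, which can be split exactly into a between-group and a within-group part. The sketch below assumes that reading. The probability values, the grouping, and the function names `entropy` and `decompose` are illustrative only, and how the two parts would map onto C' and D is an interpretation that the text does not specify.

```python
from math import log2


def entropy(p):
    """Shannon entropy in bits of a probability distribution."""
    return -sum(x * log2(x) for x in p if x > 0)


def decompose(p, groups):
    """Split the entropy of `p` into between-group and within-group uncertainty."""
    weights = [sum(p[i] for i in group) for group in groups]
    between = entropy(weights)
    within = sum(
        w * entropy([p[i] / w for i in group])
        for group, w in zip(groups, weights)
        if w > 0
    )
    return between, within


# Hypothetical distribution over four parts of the remainder of the network,
# regrouped into two subsets.
p = [0.5, 0.25, 0.125, 0.125]
between, within = decompose(p, [[0, 1], [2, 3]])
total = entropy(p)
```

The two components add up to the total uncertainty whichever grouping rule is used, so a different grouping redistributes the uncertainty without changing the sum.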

The advantage of the replacement of the complex subnetwork with a (set of) processor(s) is again that empirical questions can be studied locally, and that we therefore are able to specify what the relevant environments can be, as we were able to specify the interactions for the problem of interpenetration above. This conclusion has important consequences for the elaboration of the model in relation to both micro-sociological analysis and to more macro-sociological analysis.

First, in relation to micro-sociological analysis, the model strongly legitimates the phenomenological bracketing of context variables in the study of local networks of communication. The remainder of the network can be understood as a large uncertainty (C) which is wired in parallel, and is therefore not necessarily of much concern so long as no significant changes take place in the micro-setting. Micro-settings may thus be compared ceteris paribus.

Secondly, in relation to macro-sociological analysis, and particularly in relation to notions of functional differentiation in sociological systems theory, we may now consider specific parts as localizable densities of communications in the network. These densities may undergo highly unlikely transitions which can change the very operation of the network within them, and thereby their function for other parts of the network. A classical example is when we no longer have to bargain on the market about the exchange rate between two products, but can just pay for what we wish to buy. Money has then replaced the simple operation of the social network as a symbolic generalized medium of communication (Parsons); the economy is accordingly a functionally differentiated part of society. The morphogenesis of the differentiated, i.e. second order, media is contingent upon the operation of the network (cf. Luhmann 1990: 188).

Local densities may in turn contain specific areas of even higher or other density (like disciplines within the sciences; cf. Luhmann 1990). They can either be studied from the outside as one link of the network performing an operation (e.g., the transformation of input into output), or the black box can be opened for a detailed analysis of the specificity of the operation taking place within a given area. Since the same events will have different meanings for different systems of reference, the specification of the latter becomes crucial in empirical research. The observed events should be analyzed as the interactions among (hypothesized) constructs; these include both tangible actors and (virtual) social contexts.

Hierarchy and Heterarchy in the Network

In the example which was given above, the processor which replaces the subnetwork (in this case, D in Fig. 6c) performed a control function. A control system functions like the thermostat of a central heating system which is set at a standard value for room temperature; consequently, the operation is goal-referential to this standard temperature that is to be maintained in the other system. In general, each link and each node in a network can be considered as a processor, and then be analyzed in terms of both the self-reference and the external references in the program that it runs, and in terms of whether these operations are recursive or not. For example, a system can be defined as self-organizing insofar as it is self-referentially able to control its external references by using recursive loops during its operation.
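The thermostat analogy can be sketched as a simple feedback loop in Python. This is a minimal illustration, assuming proportional control with a hypothetical `gain` parameter; the function names, the starting temperature, and the disturbance sequence are invented for the example.

```python
def control_step(temperature, setpoint, gain=0.5):
    """Move the controlled system a fraction `gain` of the way back to the setpoint."""
    return temperature + gain * (setpoint - temperature)


def simulate(start, setpoint, disturbances, gain=0.5):
    """Apply each external disturbance, then let the controller damp the deviation."""
    temperature, trace = start, []
    for shock in disturbances:
        temperature = control_step(temperature + shock, setpoint, gain)
        trace.append(temperature)
    return trace


# Hypothetical run: a cold room, five quiet steps, one disturbance, five quiet steps.
trace = simulate(start=15.0, setpoint=20.0, disturbances=[0.0] * 5 + [-4.0] + [0.0] * 5)
```

The controller refers only to the standard value and to the current state of the other system. After the disturbance the deviation is damped again without the controller needing any knowledge of where the disturbance came from.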

The possibility of also replacing parts of the network, in relation to other parts, with a processor sheds new light on the problem of hierarchies among social systems. All parts of the network can be rewritten as parallel connections which may contain processors in relation to each other. Therefore, in terms of the network, they are all "next to each other"; there is heterarchy among all these processors. Hierarchy is generated only when processors exercise a control function on a part of the network. Hierarchy can therefore always be defined in terms of programmes of localizable processors: some parts of the network can be programmed so that they control other parts of it.

Because of this local character of the generation of hierarchy, the propagation of its effects can also be determined by each processor which is connected to this instance. All control is therefore contingent upon the situation, however broadly the situation may be defined. Paradoxically, the extension of the originally very strongly hierarchized cybernetic model (cf. Parsons) has brought us to a formulation which cannot accept any a priori assumption concerning hierarchies. The reason is that we not only have subsystems which have to integrate normative order, but also self-referential ones which have to make their own decisions about whether or not to accept the control which is applied to them through the network.

Control functions can also be stabilized as programs in specific (sets of) links in the network. While they may function in the network at large, they can be localized in terms of the structure of the network. Since control is local, it can be effectively counteracted by other parts of the network. It is therefore no longer considered inherent to structure that certain subsystems (e.g., the value system) assume control; but this becomes an empirical question. For example, we can raise the question of how much money we would have to pay to bribe the judge. Hierarchy is an effect of local processing in an otherwise heterarchical network.

Heuristic functions of the model

The positive formulation of notions like control and hierarchy as relations among systems can stimulate the design of empirical research. In the sociology of science, for example, one usually wrestles with issues like the relations between "cognitive tasks" and "social organization" (cf. Whitley 1984). How is a research group as a part of social structure constrained and enabled to succeed in relation to the current state of the discipline (with its "cognitive structure")? How and when may the latter be reshaped by the former's activities? Obviously, similar issues can be raised in all sociological questions which address issues of change and continuity.

Let me pursue this example to show the strength of the parallel and distributed computer model for designing such a problem. First, one has to raise the question of the operation of the unit of analysis: what does the research group do when it researches? Secondly: how is this system locked into its relevant environments? What will these networks process as a signal from the research group? How do we define the operation of the larger network? For example, one can define the operation of the relevant scientific networks in terms of publications, and try to measure their operation scientometrically; or one may define the operation in terms of the personal communications among members of the relevant scientific communities. In the latter case one should use a sociometric technique for the measurement (cf. Leydesdorff and Amsterdamska 1990).

If we now address the question of what might qualitatively improve the performance of the research group under study, we can obviously distinguish between the goals of more publications and of more communications with colleagues, e.g., through organizational functions in professional societies, inviting visitors, etc. We may in effect consider these networks as specific processors, as parallel densities in the relevant network between the research group under study and its colleagues or competitors. For a researcher who is familiar with these two types of environments, it is obvious that each has its own "rules of the game," i.e., each dimension in the relevant networks runs its own program. The specified programs can additionally interact, and one can assess the dependent variable--in this case, the visibility of the research group--in terms of each process and of their interaction. What traditionally have been called "factors" should now be specified as processes.

However, in addition to the possibilities for the research group to play with the various signals which it would have to give to the relevant networks in order to enhance its visibility, the group is also doing its own thing, and moreover, it is composed of individual scientists who can conform in varying degrees with this system's goals. Obviously, the group is continuously exposed to the risk of losing internal cohesion, since each member of the group may self-referentially drift away, and eventually reach a different lock-in (for example, leave the specialty, etc.).

Therefore, the extent to which the group is able to organize its own self-referential loop as a group remains an empirical question. The processors representing the various nodes and links within the group may change their operations over time, and as a consequence the process within the group may undergo a path-dependent transition. Note that a change in the communication process does not necessarily relate to a change in the physical composition of the group (cf. Krohn and Küppers 1989). The group does therefore not have to be consciously aware of irreversible transitions, but it may lose or gain because of its changing position in terms of the signals which it can send to specified networks in its environment.

In summary, change may have emerged from drifting into a lock-in (cf. Arthur 1988), or it may have been produced in the interaction at the level of the network, and initially have gone unnoticed. The risk of historically unnoticed change legitimates a focus on "reflexivity" in sociology. However, this is reflexivity not in a transcendental sense, but empirically, i.e., with reference to the system: what does the system do when it processes? and has this operation remained the same during the processing? If not, do the relevant changes have consequences for the chances offered to those who participate in the communication networks under study?

Algorithms of Parallel and Distributed Computation

In addition to using the model as an heuristic for theoretical specification, the researcher may be able to specify a design so that algorithms from parallel and distributed computation can be applied directly to relevant questions. The algorithmic approach allows us to specify frequencies of operation for all the systems specified in the multi-variate design. Algorithms enable us to study the overhead by communication in relation to computing time, losses or production of redundancy by failure or delay of communication, and relations between network architectures and the degree of possible concurrency of operations. In short, they enable us to study synchronization problems among systems.

For example, if at a large party one has to serve a dinner, one may consider the option of having the guests (sequentially) queue along a counter, or consider each of the tables as a serving system. Then, one may proceed to optimize the numbers of guests in relation to the numbers of dishes to be handled at the same time. The example is perhaps absurd, but it may give an intuitive access to questions which are of relevance when organizing a production system.

If systems are coupled, synchronization and termination problems are particularly prominent. For example, someone at the dinner will have to play the role of sending a so-called marker message to check whether everyone has finished the main dish, before one can begin to serve desserts. Synchronization problems are pervasive in social life, since all systems can be driven by a given system with its frequency, but self-organizing systems also contain an internal clock. A real life example is the well-known fact that the time one has to spend standing in a line when there are several counters does not depend only on the length of the various queues but also on the varying tempers of those who serve at the other end. If social systems are synchronized, it is always possible to specify a synchronization mechanism (cf. Latour 1988: 164).
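The marker message in the dinner example can be sketched as follows. This is a deliberately simplified illustration, not one of the termination-detection algorithms from the literature: it assumes that the marker starts at the first table at tick 0, that it is passed from table to table in a fixed order, and that each table forwards it only once it has finished the main dish. The function name `marker_return_tick`, the `hop` parameter, and the finishing times are hypothetical.

```python
def marker_return_tick(finish_ticks, hop=1):
    """Tick at which a marker, passed from table to table, returns to the host.

    A table forwards the marker only once it has finished the main dish;
    each forwarding takes `hop` ticks.
    """
    tick = 0
    for finished_at in finish_ticks:
        tick = max(tick, finished_at) + hop
    return tick


finish_ticks = [3, 1, 5]  # hypothetical internal clocks of three tables
dessert_at = marker_return_tick(finish_ticks)
overhead = dessert_at - max(finish_ticks)  # waiting caused by the communication itself
```

With these numbers the desserts can be served one tick after the slowest table has finished. If the slowest table happens to be the first in line, as in `[5, 1, 1]`, the marker returns only at tick 8: the overhead depends on how the internal clocks of the tables relate to the order of communication.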

When one begins to see each individual as living his or her own life, and each social system as containing potentially different clocks, the applications range from synchronization problems in having dinner together to the problem of traffic regulation in different neighbourhoods of a city, or to the question of how disciplinary and specialty structures are reproduced or can be reshaped by research groups. In each case, we have various systems with specific operations that interact in communications, but that are otherwise only conditional for one another (cf. Leydesdorff 1992b). Stabilization means that a system maintains structure along the time axis, and thus operates periodically. By operating, systems can also communicate frequencies in the time dimension (Leydesdorff 1992d). Consequently, there are communication overheads, and spills of resources, i.e., waiting times in interactions among systems. Note that the different systems should not be considered as hierarchically organized aggregates of one another; they can retain their specific identities when interacting with one another if they reproduce structure over time.

"Meaning" at the level of the network

An urgent question for sociology remains whether the network contains only information, or whether it can also generate "meaning." A related question is whether the substance of the information matters for the network, or does the network only contain information in the sense of relative frequency distributions of beeps and bits. Does the network only process messages, or is it also able to operate systematically on the information content of the messages?

A message can only be given meaning if its expected information content can be reconstructed. For example, at the receiving end, social communication requires an actor who is able to receive the message, to deconstruct it with respect to the expected information, and to assess this information reflexively with reference to its own structure. Thus, if a system is able to reflexively position messages, it is able to give the messages meaning; if not, the system can only disturb the content by generating noise.

This issue requires a separate paper, but let me briefly indicate the conditions under which the network can position the messages reflexively. By selecting the information in the specific form in which it can be transmitted, the transmitting system takes the event of the interaction as a message, which is then part of this system's operation. For the network, this message is information, unless this system contains a second dimension for which the information in the first dimension makes a difference. A differentiated communication system, for example, contains this second dimension. The message has then a position within the network, i.e., it has an irreflexive meaning for the network. If the differentiation is maintained over time, this third dimension provides the system with sufficient complexity for changing the meaning of the communication. In social theory, this additional meaning is known as, for example, the situational meaning of a communication.

For example, Luhmann (1990: 209) defined the science system as the functionally differentiated part of the social system in which the information content within messages is checked (at local nodes!) on whether the content is also true. Truth is then a function of the social communication system, and in this sense historical. It operates on communications which are brought under its control by local actors, and thereby feeds back on the possibilities for further communications in this (functionally differentiated) domain. The additional dimension, however, will usually change the meaning of the communication for the actors involved. Note that the additional ("situational") meaning of the communication can again only be hypothesized by actors who are embedded in the system, since the social system remains virtual, and accordingly its dimensions remain latent.

More generally, the part of the network which generates reflexive meaning can be considered analogous to the parts which generate "truth" or "trust" within the network, i.e., as another local processor which is connected parallel to the ongoing communication processes in the social system under consideration. Reflexive meaning feeds back to the further selection of what is relevant ("meaningful") to the system. Note that a communication in a differentiated system can have more than one social meaning (cf. Münch 1988). The crucial point is that these meanings are generated with reference to the network, and can subsequently be reconstructed by the actors involved.

In short, social systems not only contain and process information, but they also contain and develop structures in order to do this. While the network is initially heterarchical, it will historically be structured, and thus contain (local) hierarchies. The parallel computer model does not preclude qualitative historical and sociological research! It offers primarily a model to study structure/action contingencies in which both "structures" and "actors" have to be specified.

Summary and Conclusions

I have argued that by considering actors as parallel processors in a network, a cybernetic and dynamic model for "actor"/"structure" relations can be developed, one which builds on existing theoretical models in sociology, but also improves our understanding of their relative contributions, and in a sense integrates them. First, I showed that dynamic extension of the model for formal network analysis results in the distinction of more than one self-referential loop. The specific solution proposed by those who focus on action while "bracketing" the structural dimensions of the action has led to the predominance of a field metaphor in which certain directions are favoured ("enabled"), while the movement in other directions requires a certain momentum ("constrained"). A field model theoretically precedes a cybernetic model (cf. Hayles 1984). However, the problem with a cybernetic model was that this position was claimed in sociology by the Parsonian tradition.

The more recent extension which notably Luhmann has given to the cybernetic model in sociology makes it possible to develop the parallel computing model, and therewith to introduce the powerful concepts of information theory, systems theory and artificial intelligence which apply to this model. I specified social systems as specific network architectures in which all memory attributed to the networks is virtual, and physically located in the actors. Since the network is distributed, the topography of the network remains uncertain in terms of the actors involved, and communications remain prone to failure. In the theoretical reconstruction, the analyst has therefore to specify the virtual systems and their latent dimensions as hypotheses. As noted, the model extends the factor model in assuming frequencies in the time dimension to be relevant; it extends traditional evolution theory in the distinction between first-order selection and second-order stabilization.

I specified the model in this study mainly at the theoretical level, but I have published some corresponding algorithms elsewhere (Leydesdorff 1992a, 1992b, and 1992c; see, e.g., Rumelhart et al. 1986). However, the theoretical considerations have led me to a number of important conclusions. Let me recapitulate them:

* "Actors act, and networks network." Although the systems interact in a single action, one has to specify the different operations with reference to the relevant systems. The attribution of network operations to actors can easily lead to category mistakes.

* I specified the notion of "structural coupling" for the social system in terms of its distributed and virtual character, and elaborated it for the problem of "interpenetration" in sociology. The "enabling and constraining" role of structure for action can then be specified: (inter-)action is the dynamic equivalent of the covariance among the systems, i.e., the part of the variance in each self-referential system which is determined by the other system. The remaining variance in each system is only conditioned by the other system. In other words, the remaining variance is auto-covariance of a self-referential system (Box & Jenkins 1976).

* The meaning of events can only be specified with reference to a system. Events can have significance for each of the actors and for the network(s) among them in various dimensions. Reflexive systems are also able to position the incoming information. Events may consequently have a (finite!) number of histories attached to them (cf. Mulkay et al. 1983). The delineation of the social network, however, has to remain a hypothesis for an actor in the network, and correspondingly the "situational" meaning(s) which can reflexively be attributed to an event at this level are hypothetical reconstructions in need of empirical corroboration. Note the systematic analogy between the assumption of meaning for other relevant actors and with reference to specified social contexts.

* In the situational analysis, the "bracketing" of the environment of the situation is legitimated using the parallel computing model, since the remainder of the network can be considered as a parallel connection with a large uncertainty. The situational analysis need no longer be so methodologically modest as to remind us that each situation is unique and requires description in itself. In the parallel computing model the situational analysis has a much higher methodological status, since all operations and their effects are necessarily localized. Unless one is warranted to make law-like assumptions, operations can only be studied locally.

* If one wishes to study structure, one should not only specify and study the tangible hardware in all the branches of the network ("the actors and their actions"), but also the software. For example, when Giddens considers "structure" as dual and virtual, he additionally has to specify its duality in terms of virtual spaces and/or times. Since action and structure are structurally coupled, one cannot fully understand the dynamics of the one without understanding the dynamics of the other. Idealization ("bracketing") is thus only of limited value.

* The introduction of an electro-technical view of the network enabled us to discuss parallel wiring within the network. Densities in networks can technically (e.g., statistically) be analyzed in terms of dimensions of a multi-dimensional space, and theoretically be understood as parallel circuits with specific functions for the network. The question becomes whether second-order processes along the time dimension can be distinguished within the densities thus delimited. Self-referential densities add to the stabilization of structure. Thus, structure should not be understood in terms of instantaneous "eigenstructure," but as a process which results in the maintenance of eigenvalues over time.

* The network is heterarchical by default. Hierarchy is localized, and therefore constructed (programmed into the processor). However, this does not imply that one can necessarily deconstruct it into the actions of actors: such a deconstruction is possible only if the actors have been able to determine completely the variance in this part of the network. In empirical cases, this condition will usually not be met. The (partial) continuity in structure then refers to the network as a different system.

In summary, the parallel distributed processing model allows for the importation of results from situational analysis, technical (e.g., statistical) analysis, and organizational analysis. It also allows one to specify how these different types of analysis can be made relevant for one another and for the general model. Additionally, it provides us with a set of algorithms which can be applied to the study of social problems when they are (re-)formulated in terms of this model.
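
As one illustration of such a (re-)formulation, the first conclusion above (the split between the part of a system's variance which is shared with another system and the part which remains its own) can be sketched in terms of the mathematical theory of communication. The sketch below is a minimal one under stated assumptions, not a definitive implementation: it assumes two discrete variables observed at the same moments, it uses Shannon's expected information content H and the transmission T as stand-ins for variance and covariance, and the function names and the toy data are hypothetical.

```python
from collections import Counter
from math import log2


def entropy(observations):
    """Shannon entropy (in bits) of a sequence of discrete observations."""
    counts = Counter(observations)
    total = len(observations)
    return -sum((n / total) * log2(n / total) for n in counts.values())


def transmission(xs, ys):
    """Mutual information T(x, y) = H(x) + H(y) - H(x, y), in bits."""
    return entropy(xs) + entropy(ys) - entropy(list(zip(xs, ys)))


def decompose(xs, ys):
    """Split H(x) into the part shared with y and the remainder H(x|y)."""
    h_x = entropy(xs)
    shared = transmission(xs, ys)
    return {"total": h_x, "shared": shared, "remaining": h_x - shared}


# Hypothetical toy data: the states of one actor and of the network
# at eight successive moments (assumed values, for illustration only).
actor = ["a", "a", "b", "b", "a", "a", "b", "b"]
network = ["x", "x", "y", "y", "x", "y", "x", "y"]

parts = decompose(actor, network)
print(parts)
```

In this reading, "shared" corresponds to the uncertainty in the actor which is determined in the coupling with the network, while "remaining" is the uncertainty which is only conditioned by the network. A time-series analogue would replace the remainder with a measure of auto-covariance, which this sketch does not attempt.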

NOTES